According to the BBC, a long-term artificial intelligence (AI) research project led by Facebook could help answer the age-old question, “Where did I put that thing?”

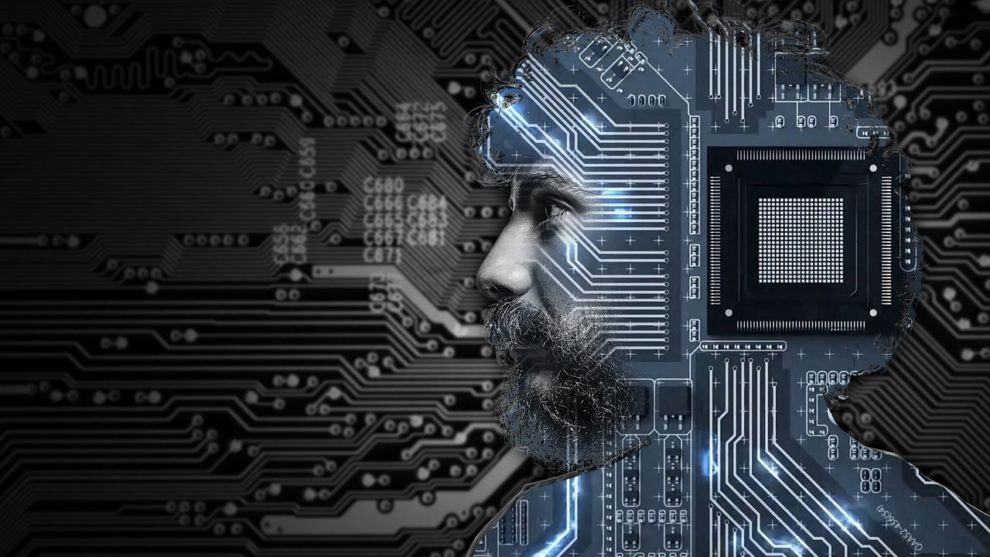

Facebook is working on a new AI-based system that could analyse people's lives through first-person video, recording what they see, do and hear, in order to help them with daily tasks.

Imagine your AR device showing you exactly how to hold the sticks during a drum lesson, guiding you through a recipe, locating your misplaced keys, or recalling memories as holograms that come to life in front of you.

Facebook AI has announced ‘Ego4D’, a long-term project aimed at solving research challenges in ‘egocentric perception’, that is, understanding the world from a first-person point of view.

“We brought together a consortium of 13 universities and labs across nine countries, who collected more than 2,200 hours of first-person video in the wild, featuring over 700 participants going about their daily lives,” the social network said in a statement.

According to Facebook, this expands the scale of egocentric data publicly available to the research community by an order of magnitude, with more than 20 times as many hours of footage as any other data set.

“Next-generation AI systems will need to learn from an entirely different kind of data — videos that show the world from the center of the action, rather than the sidelines,” said Kristen Grauman, lead research scientist at Facebook.

In collaboration with the consortium and Facebook Reality Labs Research (FRL Research), Facebook AI has developed five benchmark challenges centred on first-person visual experience, which it says will spur advances toward real-world applications for future AI assistants.

The five benchmarks of Ego4D are episodic memory, forecasting, hand and object manipulation, audio-visual “diarization,” and social interaction.

“These benchmarks will catalyse research on the building blocks necessary to develop smarter AI assistants that can understand and interact not just in the real world but also in the metaverse, where physical reality, AR, and VR all come together in a single space,” Facebook elaborated.

The data sets will be made available in November of this year to researchers who sign the Ego4D data use agreement.

Separately, FRL researchers used Vuzix Blade smart glasses to collect a further 400 hours of first-person video in staged environments. This data will also be made public.

While people can easily relate to both first- and third-person perspectives, today's AI systems lack that level of understanding.

“For AI systems to interact with the world the way we do, the AI field needs to evolve to an entirely new paradigm of first-person perception,” Grauman said. “That means teaching AI to understand daily life activities through human eyes in the context of real-time motion, interaction, and multi-sensory observations.”